TRuST Scholarly Network hosts conversations on the transformational impact of artificial intelligence, big data and responsible innovation.

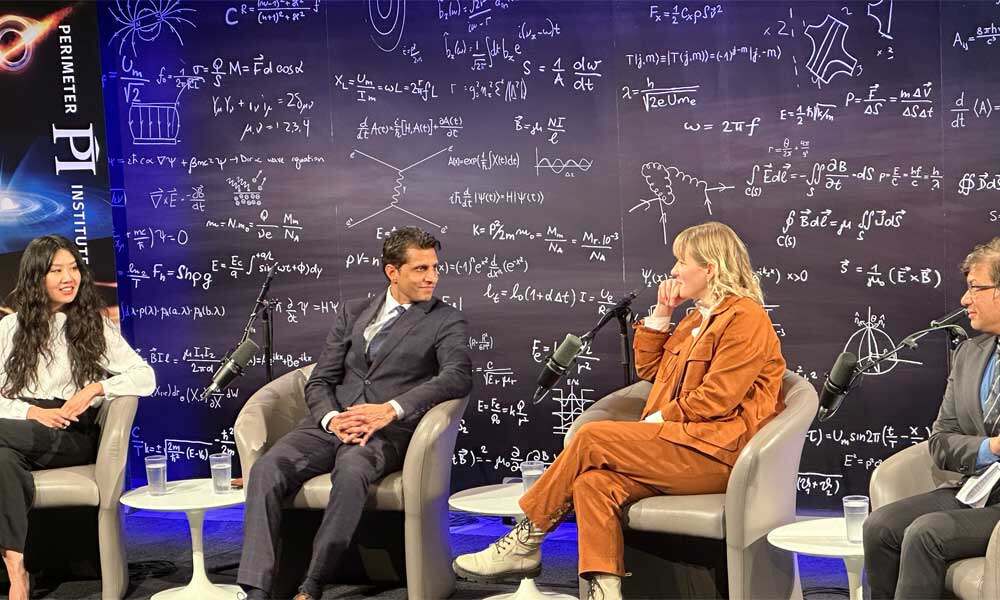

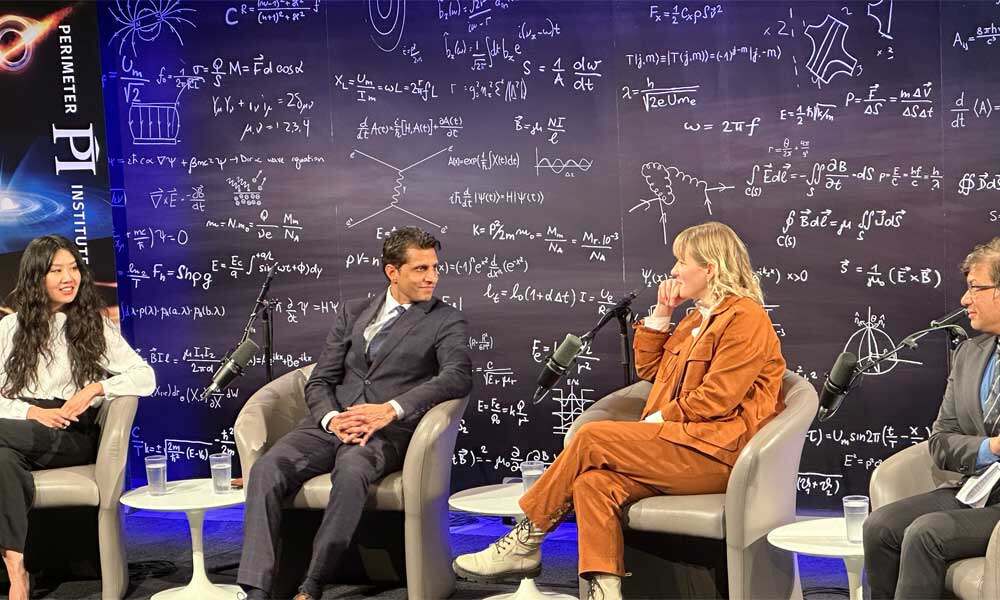

The University of Waterloo, in collaboration with the Perimeter Institute, hosted the TRuST Scholarly Network’s Conversations on Artificial Intelligence (AI), a dynamic and engaging discussion that delved into the societal impact of AI. The event was also supported by the Waterloo AI Institute, which is dedicated to developing human-centered AI for social good, fostering trust with industry partners, and scaling responsible solutions to enhance lives.

As AI and big data grow in influence and continue to evolve, the event explored the ethical considerations surrounding these technologies. It also addressed concerns about confidence in them and the potential risks they pose, especially when they are used in research and innovation.

The Trust in Research Undertaken in Science and Technology (TRuST) scholarly network brings together researchers and practitioners from across disciplines to improve communication with the public and build trust in science and technology. TRuST aims to understand why trust in science and technology is lacking and to help earn and sustain that trust ethically.

The panel discussion was moderated by Jenn Smith, the engineering director and WAT site co-lead for Google Canada.

Panel participants were:

- Lai-Tze Fan, professor in Sociology and Legal Studies in the Faculty of Arts and Canada Research Chair in Technology and Social Change

- Makhan Virdi, a NASA researcher specializing in open science and AI in earth science

- Leah Morris, senior director, Velocity Program at Radical Ventures

- Anindya Sen, professor in Economics in the Faculty of Arts and associate director of the Cybersecurity and Privacy Institute

The discussion began with the question of how society can trust AI when it draws on the languages and knowledge that humanity has built and cultivated over millennia.

Trust, in this context, involves a willingness to be vulnerable: acknowledging the unknown and the aspects of AI that are not yet fully understood.

“It centres on open access and accessibility, particularly in relation to AI’s black box nature — both in its creation and the content it delivers,” Fan said. “Achieving this requires increased accountability from developers and the industry, as well as improved governance and regulation. Users, too, need to be realistic about the technology’s limitations.”

The conversation also examined two extremes: dismissing AI as a mere random pattern generator, or attributing human-like agency and harmful intent to it.

“I believe we have to be realistic about the promise and the perils of this new technology,” Virdi said. “And use it for the betterment of humankind, as we have used knowledge and language for the last so many centuries. Balancing different extremes is very important in this discussion.”

A key theme that emerged through the discussion was the application of AI in innovation.

Panellists shared perspectives on the potential benefits of AI, such as in drug discovery and medical diagnostics, while underscoring the need for responsible development and data governance. Privacy concerns, data usage and the role of policy and governance in ensuring the safety and security of consumer data became focal points.

“Existing policies are well intentioned, but how much emphasis are we putting on innovation — and we should be putting emphasis on innovation, there are incredible benefits to these technologies — but we must be cognizant of harm,” Sen said. “And there needs to be definitions around liabilities and penalties for when high impact systems cause harm. And we also need to be clear when we define harm because our concept of harm is changing because of AI.”

Bias in the datasets used to train AI systems also came into focus.

“Transparency is crucial, especially in AI development. This involves revealing what is included, omitted and the perspectives shaping the information,” Fan said. “Training a machine learning system on 150 years of art, for example, may inadvertently exclude diverse perspectives from the past and have a disproportionate Western influence.”

“To address this, advocating for equitable and diverse information and data representation starts at the design stage and every single stage after,” Fan continued.

The event featured a question-and-answer session, allowing both in-person and online audience members to join the conversation. The questions centred on the lack of tools that ordinary people have for negotiating with companies that use their data.

These questions highlighted the need for improved governance, greater transparency, terms and conditions that people can understand and more individual control over personal data, all with the goal of advancing trust in AI technologies.

After the panel discussion, a reception allowed the audience to have their unanswered questions addressed by the panellists, facilitating continued dialogue on AI and its unmistakable impact on society.

The TRuST Scholarly Network’s Conversations on Artificial Intelligence is one of a series of events planned to help promote trust in science and technology and engage the public with researchers and industry practitioners. The network believes the first step to earning public trust in science is to reflect critically on the practices of research and scholarship themselves, to see how we might improve and move forward more inclusively, more thoughtfully and more collaboratively.

The next TRuST event is scheduled for May 2024, and updates on the scholarly network’s activities can be found on its website.